Hi all. Just a quick one today, bringing you up to date with a couple of new and interesting developments in the field of research rankings.

The first has to do with the sudden rise of “Open” bibliometric data. To date, all of the major research rankings have used data from one of the two major publishers: Elsevier or Clarivate (formerly known as Thomson Reuters). No surprise here: Elsevier’s Scopus and Clarivate’s Web of Science are the main collators of bibliometric data. The two collections aren’t exactly the same: Scopus includes more journals from more countries, which makes it more “comprehensive” than Web of Science, but at the same time Scopus pretty much by definition includes more low-quality journals too.

But in late 2022, these two options got a competitor, known as OpenAlex. It’s a pretty cool little platform, and you can read more about it here, but basically it’s a free bibliometrics database which, provided you install a couple of plug-ins and spend a little bit of time getting to know its ins and outs, is a pretty neat open access alternative to the big databases. And last year, the Leiden Research Rankings (described before in the blog), which had hitherto been a faithful Web of Science user, began producing a version of its rankings using OpenAlex.

The launch of this new ranking is interesting for two reasons. The first is that it chose to produce a new set of rankings without ditching the old set, basically so that people could see the differences (and there are a few) between two sets of rankings using the same methodology but with two different data sets. The second is that the good folks at Leiden CWTS didn’t just do this for the current year(s)—they re-did their entire database going back to 2006, so it’s possible to track differences (if any) over time.

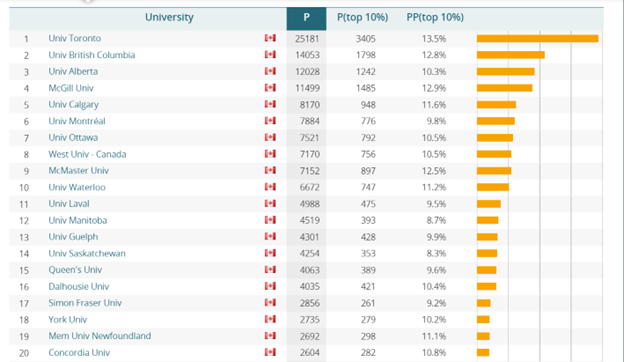

So how different are the two sets of results? Well, here are the Canadian results for the older Web of Science-based ranking:

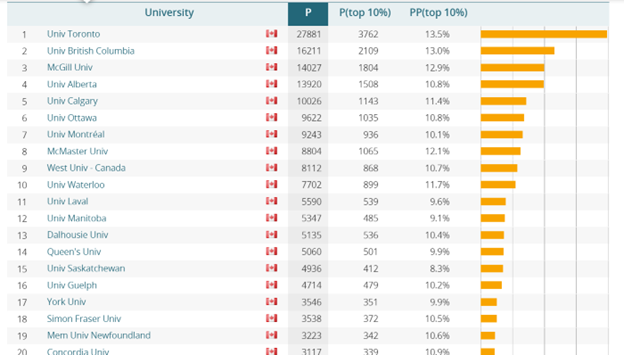

And here are the results of the OpenAlex-based ranking:

As you can see, the two data sources result in almost identical rankings. Both the top ten and the top twenty institutions are identical in the two systems. Eighteen of the institutions have the same rank or move only one place; the only exceptions are Dalhousie, which looks significantly better in the Open ranking than the traditional one, and Guelph, which looks significantly worse. There are of course additional differences if you dig a little deeper into the data, but the point is that, provided you aren’t squinting too hard, the two databases end up giving you more or less the same result.

It seems that for now, CWTS Leiden plans on running both sets of results in parallel (it released a new set of Web of Science-based rankings in July, several months after debuting its open rankings). But this might be the first time I’ve ever seen a ranking introduce a methodology change but continue with the old methodology just so people can see what difference, if any, the methodological change makes. Bravo.

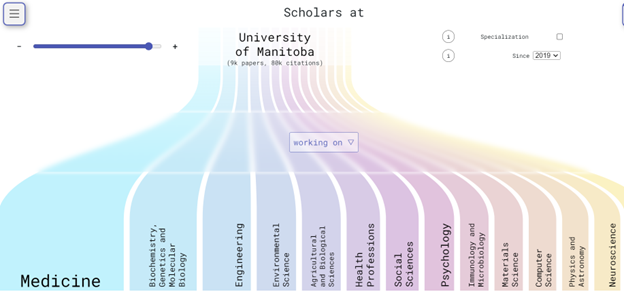

Meanwhile, OpenAlex is being used in another way by a team based mainly at Corvinus University in Hungary. This effort is called Rankless and, as the name suggests, it’s not a ranking per se but rather a type of data visualization tool which allows you to look through individual institutional research profiles one at a time. Here is a snapshot of the University of Manitoba’s research outputs by field over the past five years.

Kind of cool, right? But also difficult to use for comparative purposes since you can only generate one of these at a time. It also very definitely needs more documentation (it’s not clear what you’re seeing half the time, and the difference between showing the data in “specialization” and non-specialization mode is deeply fuzzy).

Anyway, I doubt that OpenAlex will widely supplant Clarivate or Elsevier any time soon, but if it is democratizing access to better scientific and bibliometric data, that’s a good thing and well worth monitoring in the years to come.

In that final image, are humanities off the edge of the graph? Or do they not count as research? Or does nobody at U Manitoba ever publish on, like, Hamlet?

The fine print on that graphic says “9k papers, 80k citations”, so my guess is that the width of the bars is proportional to the citations received (AKA “impact”). The nature of humanities publications is really the limiting factor here: the rate of publication is slow, the publications themselves typically contain fewer references (than a STEM paper), and they are less likely to be indexed (especially if they are monographs). So while we can all agree that the classics are a core part of the raison d’être of a university, it is tricky to measure that.

Quite so. I think the moral of the story is that such measures of research should rather be considered indexes of institutional commitment to STEM.