Spotlight

Wow. It’s already been a week since AI-CADEMY, and I’m still thinking about all the vibrant discussions that happened in Calgary, the insights everyone shared, and the palpable eagerness to learn and collaborate. If you haven’t already, make sure to read Alex’s Wednesday blog for a quick summary (and if you attended, more post-event follow-ups are coming your way soon). On a more personal note, I want to say what a privilege it was to take part in this convening effort, and to thank everyone who attended, presented, sponsored, or supported the event in one way or another.

Now, back to current affairs.

If you are not yet caught up, we’ve now entered a “new” chapter: Agentic AI. Agentic AI systems “can be instructed to plan and directly execute complex tasks with only limited human involvement”. While they might significantly increase operational efficiency, they also exacerbate the risks we’ve grown quite aware of through GenAI tools (hallucinations, biased outputs, leakage of private data, etc.). Agentic AI systems “also present new risks that stem specifically from their agentic properties, i.e., underspecification, directness of impact, goal-directedness, and long-term planning. […] While chatbots often cause harm by users acting upon model outputs […], Agentic AI systems can directly cause harm (e.g., autonomously hacking websites). […] Additionally, as Agentic AI systems undertake more complex and long-horizon tasks, with limited human oversight, users are likely to repose greater trust in those systems, potentially developing asymmetric relationships of dependance”.

These quotes, along with much more information, come from a recent paper published by a team of researchers from MIT, Stanford University, Harvard, and other institutions. They introduce the AI Agent Index, the first public database documenting existing agentic systems’ components, application domains, and risk management practices (see an example here). The researchers divided the 67 Agentic AI systems they reviewed into six categories:

– Software: Agents that assist in coding and software engineering

– Computer use: Agents designed to open-endedly interact with computer interfaces

– Universal: Agents designed to be a general-purpose reasoning engine

– Research: Agents designed to assist with scientific research

– Robotics: Agents designed for robotic control

– Other: Systems designed for other niche applications

So, yeah. Things are moving, and they are moving fast, at least on the industry side. In postsecondary, not so much… yet. While imagining a world of autonomous AI Agents can certainly cause a few nightmares, let’s not make the common mistake of letting fear keep us from learning about it altogether.

Next, a little less recent but still coming straight from MIT, I thought I’d share the AI Risk Repository again. The repository includes three components: an AI risk database, a causal taxonomy of AI risks, and a domain taxonomy of AI risks. This open-access database is frequently updated, so I’d recommend that institutions and decision-makers use it to monitor emerging risks, take action to mitigate them, and develop risk-informed educational material.

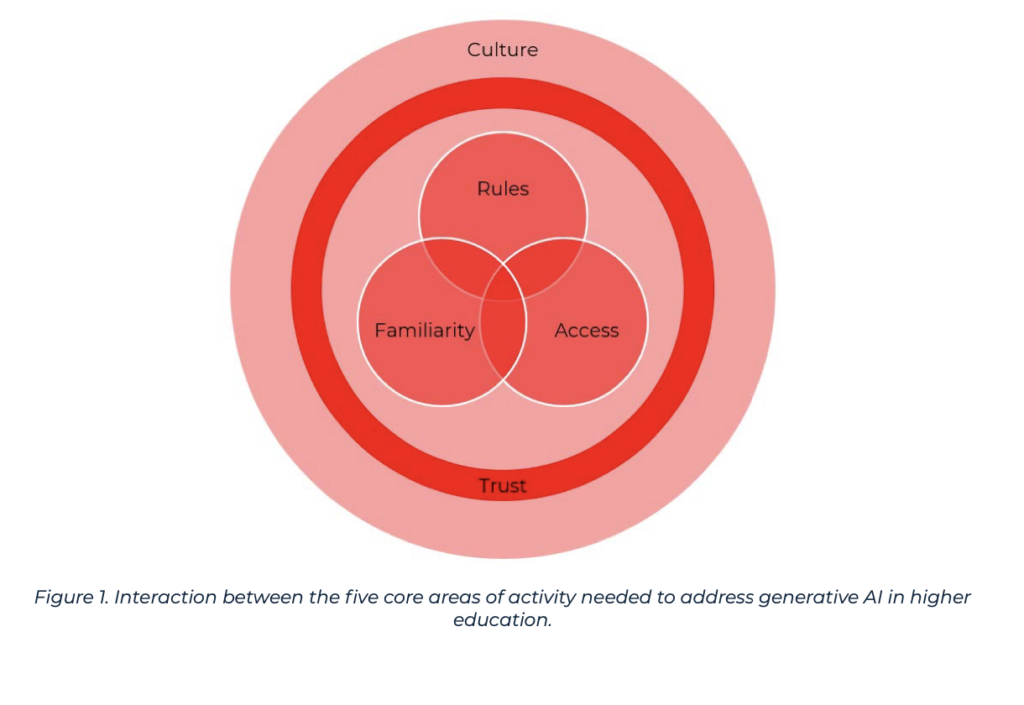

And a final piece for today: earlier in 2025, our colleague Simon Bates, Vice-Provost and Associate Vice-President for Teaching and Learning at UBC, and Danny Y. T. Liu, Professor of Educational Technologies at the University of Sydney, released the paper Generative AI in higher education: Current practices and ways forward. While the whole thing is worth a read, two elements particularly resonated with me. The first is their CRAFT Framework, which identifies key areas for immediate activity (see below).

They explain why each component matters and provide case studies.

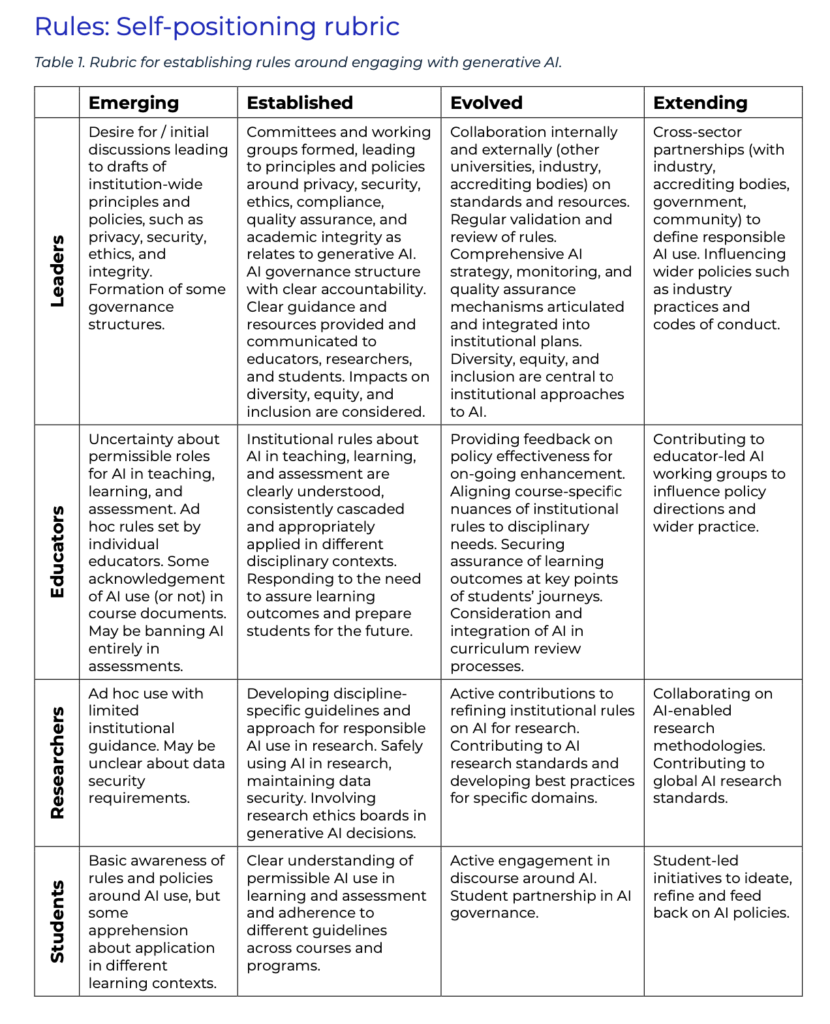

They also provide self-positioning rubrics, which are the second thing that caught my eye. You can find an example below, and there is one for each component of the CRAFT Framework. If your leadership, board, or AI working group is looking for a good conversation starter to assess where your institution currently stands, this is it.

There’s probably enough content in here to keep you busy for a little while, so I’ll stop here for now. Talk more in the next edition of the AI Observatory blog!

– Sandrine Desforges, Research Associate

Additional Resources

International Frameworks

With the right opportunities we can become AI makers, not takers

Michael Webb. FE Week. February 21, 2025.

The article reflects on the UK’s AI Opportunities Action Plan, which aims to position the country as a leader in AI development rather than merely a consumer. It highlights the crucial role of education in addressing AI skills shortages and emphasizes the importance of meeting immediate needs around AI literacy while keeping a clear eye on the future, as the balance shifts toward AI automation and a stronger demand for uniquely human skills.

Living guidelines on the responsible use of generative AI in research: ERA Forum Stakeholder’s document

European Commission, Directorate-General for Research and Innovation. March 2024.

These guidelines include recommendations for researchers, recommendations for research organisations, as well as recommendations for research funding organisations. The key recommendations are summarized here.

Industry Collaborations

OpenAI Announces ‘NextGenAI’ Higher-Ed Consortium

Kim Kozlowski. Government Technology. March 4, 2025.

OpenAI has launched the ‘NextGenAI’ consortium, committing $50M to support AI research and technology across 15 institutions, including the University of Michigan, the California State University system, Harvard University, MIT, and the University of Oxford. The initiative aims to accelerate AI advancements by providing research grants, computing resources, and collaborative opportunities to address complex societal challenges.

AI Literacy

A President’s Journey to AI Adoption

Cruz Rivera, J. L. Inside Higher Ed. March 13, 2025.

José Luis Cruz Rivera, President of Northern Arizona University, shares his AI exploration journey. “As a university president, I’ve learned that responsible leadership sometimes means […] testing things out myself before asking others to dive in.” Starting with drafting emails, he moved on to using AI to analyze student performance data and create tailored learning materials, and even to navigate conflicting viewpoints and write his speeches, in addition to now using it for daily tasks.

Teaching and Learning

AI Tools in Society: Impacts on Cognitive Offloading and the Future of Critical Thinking

Gerlich, M. SSRN. January 14, 2025.

This study investigates the relationship between AI tool usage and critical thinking skills, focusing on cognitive offloading as a mediating factor. The findings revealed a significant negative correlation between frequent AI tool usage and critical thinking abilities, mediated by increased cognitive offloading. Younger participants exhibited higher dependence on AI tools and lower critical thinking scores compared to older participants. Furthermore, higher educational attainment was associated with better critical thinking skills, regardless of AI usage. These results highlight the potential cognitive costs of AI tool reliance, emphasising the need for educational strategies that promote critical engagement with AI technologies.

California went big on AI in universities. Canada should go smart instead

Bates, S. University Affairs. March 12, 2025.

In this opinion piece, Simon Bates, Vice-Provost and Associate Vice-President for Teaching and Learning at UBC, reflects on how the ‘frictionless efficiency’ promised by AI tools comes at a cost. “Learning is not frictionless. It requires struggle, persistence, iteration and deep focus. The risk of a too-hasty full scale AI adoption in universities is that it offers students a way around that struggle, replacing the hard cognitive labour of learning with quick, polished outputs that do little to build real understanding. […] The biggest danger of AI in education is not that students will cheat. It’s that they will miss the opportunity to build the skills that higher education is meant to cultivate. The ability to persist through complexity, to work through uncertainty, to engage in deep analytical thought — these are the foundations of expertise. They cannot be skipped over.”

We shouldn’t sleepwalk into a “tech knows best” approach to university teaching

Mace, R. et al. Times Higher Education. March 14, 2025.

The article discusses the increasing use of generative AI tools among university students, with usage rising from 53% in 2023-24 to 88% in 2024-25. It argues that instead of banning these tools, instructors should focus on rethinking assessment strategies to integrate AI as a collaborative tool in academic work. The authors share a list of activities, grounded in the constructivist approach to education, that leverage AI to support teaching and learning and that they have used successfully in their lectures.

Accessibility & Digital Divide

AI Will Not Be ‘the Great Leveler’ for Student Outcomes

Richardson, S. and Redford, P. Inside Higher Ed. March 12, 2025.

The authors share three reasons why AI tools are only deepening existing divides: 1) student overreliance on AI tools; 2) a post-pandemic social skills deficit; and 3) business pivots. “If we hope to continue leveling the playing field for students who face barriers to entry, we must tackle AI head-on by teaching students to use tools responsibly and critically, not in a general sense, but specifically to improve their career readiness. Equally, career plans could be forward-thinking and linked to the careers created by AI, using market data to focus on which industries will grow. By evaluating student need on our campuses and responding to the movements of the current job market, we can create tailored training that allows students to successfully transition from higher education into a graduate-level career.”

More Information

Want more? Consult HESA’s Observatory on AI Policies in Canadian Post-Secondary Education.

Was this email forwarded to you by a colleague? Make sure to subscribe to our AI-focused newsletter so you don’t miss the next ones.