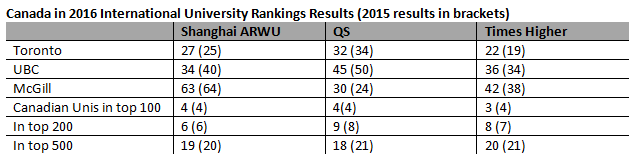

So, the international rankings season is now more or less at an end. What should everyone take away from it? Well, here’s how Canadian universities did in the three main rankings (the Shanghai Academic Ranking of World Universities, the QS Rankings and the Times Higher Rankings).

Basically, you can paint any picture you want out of that. Two rankings say UBC is better than last year and one says it is worse. At McGill and Toronto, it’s 2-1 the other way. Universities in the top 200? One says we dropped from 8 to 7, another says we grew from 8 to 9 and a third says we stayed stable at 6. All three agree we have fewer universities in the top 500, but they disagree as to which ones are out (ARWU figures it’s Carleton, QS says it’s UQ and Guelph, and for the Times Higher it’s Concordia).

Do any of these changes mean anything? No. Not a damn thing. Most year-to-year changes in these rankings are statistical noise, but this year, with all three rankings making small methodological changes to their bibliometric measures, the year-to-year comparisons are especially fraught.

I know rankings sometimes get accused of tinkering with methodology in order to get new results and hence generate new headlines, but in all cases, this year’s changes made the rankings better, either making them more difficult to game, more reflective of the breadth of academia, or better at handling outlier publications and genuine challenges in bibliometrics. Yes, the THE rankings threw up some pretty big year-to-year changes and the odd goofy result (do read my colleague Richard Holmes’ comments on the subject here) but I think on the whole the enterprise is moving in the right direction.

The basic picture is the same across all of them. Canada has three serious world-class universities (Toronto, UBC, McGill), and another handful which are pretty good (McMaster, Alberta, Montreal and then possibly Waterloo and Calgary). 16 institutions make everyone’s top 500 (the U-15 plus Victoria and Simon Fraser but minus Manitoba, which doesn’t quite make the grade on QS), and then there’s another half-dozen or so on the bubble, making it into some rankings’ top 500 but not others (York, Concordia, Quebec, Guelph, Manitoba). In other words, pretty much exactly what you’d expect in a global ranking. It’s also almost exactly what we here at HESA Towers found when doing our domestic research rankings four years ago. So: no surprises, no blown calls.

Which is as it should be: universities are gargantuan, slow-moving, predictable organizations. Relative levels of research output and prestige change very slowly; the most obvious sign of a bad university ranking is rapid changing of positions from year to year. Paradoxically, of course, this makes better rankings less newsworthy.

More globally, most of the rankings are showing rises for Chinese universities, which is not surprising given the extent to which their research budgets have expanded in the past decade. The Times threw up two big surprises: first by declaring Oxford the top university in the world when no other ranker, international or domestic, has it in first place even within the UK, and second by excluding Trinity College Dublin from the rankings altogether because it had submitted some dodgy data.

The next big date on the rankings calendar is the Times Higher Education’s attempt to break into the US market. It’s partnering with the Wall Street Journal to create an alternative to the US News and World Report rankings. The secret sauce of these rankings appears to be a national student survey, which has never been used in the US before. However, getting a statistically significant sample (say, the 210-student per-institution minimum we used to use in the annual Globe and Mail Canadian University Report) at every institution currently covered by USNWR would imply an astronomically large sample size, likely north of a million students. I can pretty much guarantee THE does not have this kind of sample. So I doubt that we’re going to see students reviewing their own institution; rather, I suspect the survey is simply going to ask students which institutions they think are “the best”, which amounts to an enormous pooling of ignorance. But I’ll be back with a more detailed review once this one is released.
