I am getting pretty sick of AI hype. It’s not that I think AI is without value or a mirage or anything, but I think people are getting weirdly reluctant to challenge even the most obviously nonsensical claims about the industry. But since nobody else seems to want to play skeptic on this, I guess I’m “it”. So here goes:

My critique of current AI-mania is basically three-fold.

- The Term Artificial Intelligence is Being Stretched Beyond Meaningful Use

This is a point maybe best made by Ian Bogost in The Atlantic a few months ago. The term “artificial intelligence” used to mean something sentient, self-aware, or at the very least autonomous. Nowadays it means nothing of the sort; pretty much anything that runs an algorithm over large amounts of data gets described as AI. It’s a term that companies use to hype their new products – but usually, when you see the term “AI” there is very little intelligence actually involved. Or even necessarily very much learning. It’s often just a label slapped on an ordinary tech project to attract the attention of VCs.

- Lab Success Does Not Equal Commercial Success

A few weeks ago on Twitter my friend Joseph Wong of the University of Toronto pointed out that the way we are talking about and hyping AI bears more than a passing resemblance to the way we talked about biotech in the early 1990s (and he should know, having authored a rather excellent book on the subject). There have certainly been some impressive recent gains in the way machines learn to recognize specific types of patterns (in particular, visual ones) or tackle certain types of very specific problems (beating humans at Chess, Go, etc.). These are not trivial accomplishments. But getting from there to actual products that affect the labour market? That’s a totally different matter.

I think you can basically boil the problems with putting useful AI on the market down to two broad categories. The first is a familiar one in computer science and it’s called GIGO – or “garbage in, garbage out”. Basically, the promise of AI is that if you give a particular type of algorithm a large enough data set, it will identify correlations well enough to predict X from Y at least as accurately as a human does. But that assumes the training data are correctly labelled and representative of the situations in which the algorithm will actually be used. In computerized games, that’s not a problem. Up to a certain level of detail on images, it’s not a problem. But that’s not always the case; many data sets are messy or highly contextual. Remember the story about the AI program that could “tell” a person’s sexuality from a photograph? Turns out the photographs were from a dating site, a rather restricted sample wherein people presumably had a reason to play up certain aspects of their sexuality. Would the same AI work with passport photos? Probably not. But a lot of AI hype is based on computers using contextualized data like this and assuming the results will carry over to real-life, free-flowing, non-contextualized situations. And the evidence for that is not especially good.

The second is simply that even if you have clean data sets and you have AI that recognizes patterns well, no one is even close to coming up with pattern recognition that works in more than one domain. That is to say, there is no such thing as “generalized” artificial intelligence. You basically have to train the little bastards up from nothing on every single new type of problem. And that’s a problem as far as commercialization is concerned. If we have to pay a bunch of computer scientists a crapload of money to design and clean databases, and to work out and laboriously verify the accuracy of pattern recognition in every individual field of human endeavour, the likelihood of commercializing this stuff in a big way anytime soon is pretty low. (Kind of like biotech in the 90s, if you think about it.)

And that’s before you even stop to think about the legal ramifications, which I think is a vastly underrated brake on this whole thing. Remember five years ago when people predicted millions of autonomous vehicles on the streets by 2020? One of the main barriers to that is simply the fact that legal liability in the case of autonomous agents is unclear. If a driverless van sideswipes your car, who do you sue? The owner? The manufacturer? The software developer? It could be decades before we sort this stuff out – and until we do there is going to be a massive brake on AI commercialization.

- The Canada Hype

Paul Wells recently noted that at a conference, when Yoshua Bengio commented that the reason Canada was good at AI was because back in the day, Canada spent a lot of money on stuff that wasn’t seen as trendy at the time, the main reaction of the Ottawa crowd seemed to be “Yeah! More money for AI now that it’s trendy!”. Which sort of sums up Ottawa’s entire approach to innovation, if you ask me. But there’s another aspect of the AI debate in Canada which is odd, and that’s the insistence that we have some kind of global leadership role.

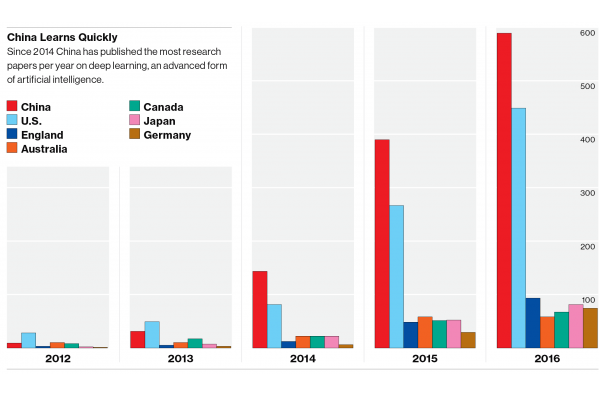

Now there’s no doubt we’re housing some top AI talent: there’s Bengio in Montreal, Geoffrey Hinton in Toronto and Richard Sutton in Edmonton to name but three. But have you ever noticed that nearly all the AI hype about Canada seems to come from within Canada? You don’t often see trade publications from outside Canada talking about it (the MIT Technology Review, for instance, has been entirely silent on this apart from when Facebook opened its new research centre in Montreal). And maybe there is good reason for that. Check out this infographic from the same MIT Technology Review, showing the evolution of research papers on “deep learning”, which is precisely the field in which Canada is supposed to be dominant:

I think what that data shows is that Canadian researchers are doing good solid work in deep learning. But it hardly suggests “leadership”. Perhaps that, too, is being overhyped for political if not commercial reasons.

To sum up: there is a lot of interesting work going on in artificial intelligence these days. Some of it is even going on in Canada. But the claim that AI is about to change the economy is very similar to the one made a few years ago that MOOCs would wipe out universities within a decade. It’s being pushed either by very naïve, open-mouthed techno-gawkers who believe that simply because something can happen it will, or by people with a commercial or political agenda which is not necessarily in the public interest. Be wary.


Your interesting article on AI is loosely (very loosely) related to this piece, which may interest your readers.

https://theconversation.com/is-there-too-much-emphasis-on-stem-fields-at-universities-86526

Paul Axelrod

Missed opportunity to title this Artificial Artificial Intelligence Intelligence?

I’m sure you’re right, but you could generalise this to trends in general, and from there to an argument about the folly of trying to predict the future.

That governments and administrations become transfixed by such trends is all the more reason that we should, rather than trying to follow their efforts at strategy or just generally picking winners, simply buckle down to lives of curiosity-driven research.