Further to Tuesday’s blog about STEM panics, I note a new report, Skills and Higher Education in Canada: Towards Excellence and Equity, out from Canada 2020, a young-ish organization with pretensions to be the Liberals’ pet think tank. Authored by the Conference Board’s Daniel Munro, it covers most of the ground you’d expect in a “touch-all-the-bases” report. And while the section on equity is pretty good, when it comes to “excellence” this paper – like many before it – draws some conclusions based more on faith than facts.

Take for example, this passage:

Differences in average literacy skills explain 55 per cent of the variation in economic growth among OECD countries since 1960. With very high skills and higher education attainment rates, it is not surprising to find Canada among the most developed and prosperous countries in the world. But with fewer advanced degree-holders (e.g., Masters and PhDs), and weak performance on workplace education and training, it is also not surprising to find that Canada has been lagging key international peers in innovation and productivity growth for many years.

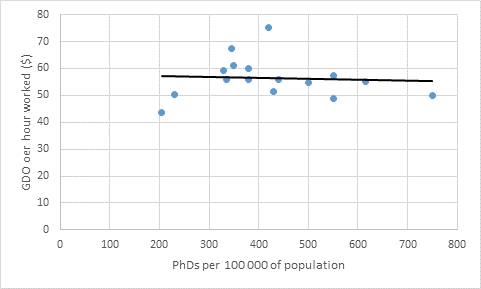

The first sentence is empirically correct, but things head south rapidly from there. The average literacy rates do not necessarily imply, as the second sentence suggests, that higher education attainment rates are a cause of prosperity; Germany and Switzerland do OK with low rates, and Korea’s massive higher education expansion was a consequence rather than a cause of economic growth. The final sentence goes even further, implying specifically that the percentage of the population with advanced degrees is a determinant of productivity growth. This is flat-out untrue, as the figure below shows.

Figure 1: Productivity vs. PhDs per 100K of population, select OECD countries

Countries in this graph: US, UK, NL, NO, BE, CH, D, SE, FI, O, DK, IE, FR, CA, JP

The pattern is one you see in a lot of reports: find a stat linking growth to one particular educational attainment metric, then infer from this that any increase on any educational metric must produce growth. It sounds convincing, but it usually isn’t true.

It’s the same with STEM. Munro tells us Canada has a higher share of STEM graduates among its university graduates than the OECD average (true – and something we rarely hear), but also intones gravely that Canada lags “key international competitors” like Finland and Germany – which is simply nonsensical. Our competitive economic position is in absolutely no way affected by the proportion of STEM grads in Finland (it’s Finland, for God’s sake. Who cares?); as for Germany, since they have a substantially lower overall university attainment rate than Canada, our number of STEM grads per capita is still higher than theirs (which is surely a more plausible metric as far as the economy is concerned).

I don’t want to come across here as picking on Munro, because he’s hardly the only person who makes these kinds of arguments; such dubious assumptions underpin a lot of Canadian reports on education. We attempt – for the most part admirably – to benchmark our performance internationally, but then use the results to gee up politicians for action by drawing largely unwarranted conclusions about threats to our competitive position if we aren’t in the top few spots of any given metric. I don’t doubt these tactics are well-meant (if occasionally a bit cynical), but that doesn’t make them accurate.

The fact is, there are very few proven correlations between attainment metrics in education and economic performance, and even fewer where the arrow of causality runs from education to growth (rather than vice-versa). If we have productivity problems, are they really related to STEM? If they are related to STEM, which matters more – increasing STEM PhDs, or improving STEM comprehension among secondary school students?

We have literally no idea. We have faith that more is better, but little evidence. And we should be able to do better than that.


I think the problem is more general than one that will be solved with more stats: this is, in fact, where we’re falling into the false claim that bigger is always better. What we need aren’t more statistics and bigger databases, not if we’d just use them to find an illusory cause-and-effect relationship. On the contrary, we should abandon the search for causal relationships. Cause and effect are hopelessly intertwined in a culture that values learning, just as they are in an anti-intellectual culture.

We get ourselves into trouble by trying to be teleological. By choosing a discrete, measurable goal and then pursuing means to that end, we risk choosing the wrong goal or incoherent means or both. Worse, we’re likely to undermine what clearly works: hard work within the basic structure of a liberal education, reinforced by the pursuit of basic, curiosity-led research.

Let’s move to an example. There is no cause-and-effect link between (say) playing the piano and being good at physics. Looking for one only tempts us to abandon both the piano and physics in favour of something that will have the same effect without all the effort. Enough of quick-fix solutions based on cause-and-effect reasoning! The Soviets tried such things, and failed miserably. Our time calls for a fostering of the erudition of free men and women.